Context Engineering: Building Intelligent Information Logistics for LLMs

Introduction

The AI development landscape has undergone a profound shift. The focus has moved from crafting perfect individual prompts to building the information architectures that surround and empower language models. Context engineering, described by Andrej Karpathy as the careful practice of populating the context window with precisely the right information at exactly the right moment, is becoming central to practical AI development.

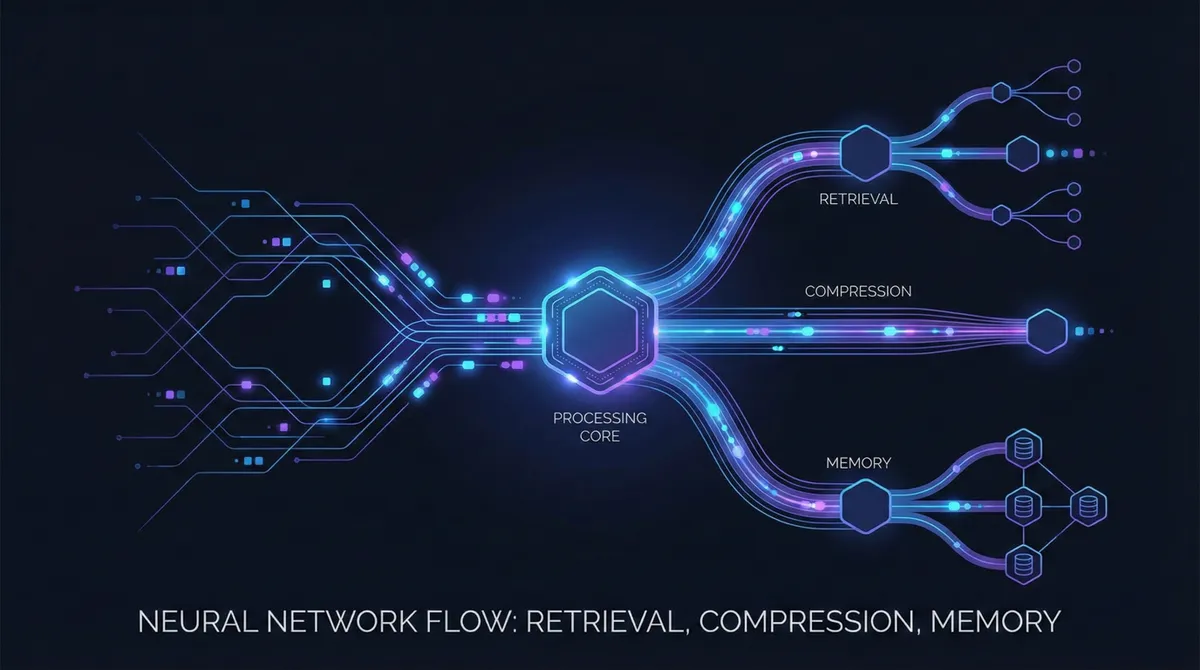

This transformation reflects a practical reality: modern LLM applications don't succeed because of magical prompts; they succeed because engineers orchestrate the information ecosystem around the model. Context engineering goes beyond prompt design to the systematic optimization of the information payload an LLM receives. It treats LLM behavior as an information logistics problem: how to retrieve, process, and manage context to maximize task performance under strict constraints on tokens, latency, and cost.

In this article, you’ll learn how context engineering cleanly separates foundational components (retrieval, long-context processing, memory, compression) from system implementations (RAG, memory systems, tool-integrated reasoning, multi-agent orchestration), discover practical techniques for production deployment, and understand the evolution from early RAG systems to today’s modular, stateful, protocol-driven context pipelines.

Prerequisites

To get the most from this article, you should have:

- Basic understanding of large language models and their context windows

- Familiarity with Python and common AI/ML libraries

- Experience with at least one LLM API (OpenAI, Anthropic, or similar)

- Understanding of basic software architecture patterns

- Optional: Knowledge of vector databases and embeddings

Understanding Context Engineering: Beyond Prompt Engineering

Context engineering is emerging as a critical skill for AI engineers in 2025, shifting focus from single prompts to dynamic systems that provide LLMs with the right information and tools to accomplish tasks effectively. Unlike traditional prompt engineering, which focuses on writing effective instructions, context engineering manages the entire information flow—including system instructions, tools, external data, message history, and more.

The Context Window as Working Memory

The LLM’s context window serves as working memory, analogous to RAM in a computer. When ChatGPT launched in late 2022, its context window was limited to 4,000 tokens, but by 2025 the industry standard has reached 128,000 tokens, with cutting-edge models supporting millions of tokens. However, expanded context windows introduce significant challenges rooted in transformer architecture.

The cost of self-attention grows quadratically with context length, which creates a practical ceiling on efficient processing. Doubling the context roughly quadruples the attention computation. The projection and feed-forward layers grow only linearly, so total cost rises by less than 4x at moderate lengths. Either way, longer contexts directly increase inference latency and operating costs. More critically, research shows that LLMs do not maintain consistent performance across input lengths; they degrade through phenomena such as context rot and the lost-in-the-middle effect.
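To make the scaling concrete, the sketch below counts approximate FLOPs for one self-attention layer. It assumes standard dense attention and an illustrative hidden size of 4,096, not tied to any particular model. It separates the two n-by-n matrix products (attention scores and the weighted sum over values) from the linear Q/K/V/output projections. Softmax, feed-forward layers, and KV caching are ignored.

```python
def attention_matmul_flops(n: int, d: int) -> int:
    """FLOPs for QK^T and (scores @ V) in one layer: two ~n*n*d matmuls,
    counting each multiply-add as 2 FLOPs. Grows quadratically in n."""
    return 2 * (2 * n * n * d)


def projection_flops(n: int, d: int) -> int:
    """FLOPs for the Q, K, V and output projections: four (n x d) @ (d x d)
    matmuls. Grows linearly in n."""
    return 4 * (2 * n * d * d)


d_model = 4096  # illustrative hidden size (assumption)
for n in (4_096, 8_192, 16_384):
    print(
        f"n={n:>6}: attention={attention_matmul_flops(n, d_model):.2e} FLOPs, "
        f"projections={projection_flops(n, d_model):.2e} FLOPs"
    )

# Doubling the sequence length quadruples the attention term...
print(attention_matmul_flops(8_192, d_model) / attention_matmul_flops(4_096, d_model))  # 4.0
# ...but only doubles the projection term.
print(projection_flops(8_192, d_model) / projection_flops(4_096, d_model))  # 2.0
```

Under these assumptions the two terms are equal at n = 2 * d_model (8,192 tokens here). Beyond that point the quadratic term dominates, so the cost of each doubling approaches 4x as contexts grow.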

The Information Logistics Framework

Context engineering reframes LLM development as an optimization problem with multiple competing objectives:

Relevance: Ensuring context contains information genuinely useful for the task

Compression: Minimizing tokens while preserving essential information

Latency: Managing retrieval and processing time within acceptable bounds

Cost: Balancing token consumption against budget constraints

Coherence: Maintaining logical consistency across multi-turn interactions

Foundational Components of Context Engineering

Context Retrieval and Generation

Context retrieval encompasses prompt-based generation and external knowledge acquisition, forming the first pillar of effective context engineering.

Retrieval-Augmented Generation (RAG) has become the cornerstone pattern. RAG is an architectural pattern where data sourced from an information retrieval system is provided to an LLM to ground and enhance generated results. According to recent surveys, organizations implementing RAG systems report a 78% improvement in response accuracy for domain-specific queries compared to using vanilla LLMs.
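The core RAG loop is: retrieve, then assemble a grounded prompt. The dependency-free sketch below shows that shape. Word overlap stands in for a real retriever such as BM25 or a vector index. The prompt wording is an illustrative assumption, not a recommended template.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word set; a toy stand-in for a real tokenizer."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, corpus: list[str], k: int = 3) -> list[str]:
    """Return up to k documents sharing the most terms with the query.
    Term overlap is a placeholder for BM25 or vector similarity."""
    q = tokenize(query)
    scores = [len(q & tokenize(doc)) for doc in corpus]
    ranked = sorted(range(len(corpus)), key=lambda i: scores[i], reverse=True)
    return [corpus[i] for i in ranked[:k] if scores[i] > 0]


def build_prompt(query: str, passages: list[str]) -> str:
    """Assemble a grounded prompt: numbered passages, then the question."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the context below. Cite passage numbers, "
        "and say so if the answer is not in the context.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


corpus = [
    "RAG grounds answers in retrieved documents.",
    "BM25 is a sparse keyword ranking function.",
    "Bananas are yellow.",
]
question = "How does RAG ground answers?"
print(build_prompt(question, retrieve(question, corpus, k=2)))
```

Documents with no overlap are filtered out rather than padded in. This keeps irrelevant text out of the context window.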

Modern RAG Architecture

Simple RAG rarely survives production. By mid-2024, production systems evolved into sophisticated retrieval pipelines combining hybrid search, cross-encoder reranking, and query transformation.

Hybrid Search: Combining dense semantic retrieval with sparse keyword methods like BM25 addresses both semantic understanding and lexical precision, with Reciprocal Rank Fusion showing 15-30% better retrieval accuracy than pure vector search.
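Reciprocal Rank Fusion merges ranked lists using only ranks. Each document's score is the sum of weight / (k + rank) over the lists it appears in, with k = 60 as the commonly used default. A minimal sketch with hypothetical document ids:

```python
from collections import defaultdict
from typing import Optional


def reciprocal_rank_fusion(
    rankings: list[list[str]],
    k: int = 60,
    weights: Optional[list[float]] = None,
) -> list[tuple[str, float]]:
    """Fuse several ranked lists of document ids into one ranking.

    score(doc) = sum over lists of weight / (k + rank), with rank starting at 1.
    """
    if weights is None:
        weights = [1.0] * len(rankings)
    scores = defaultdict(float)
    for ranking, weight in zip(rankings, weights):
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += weight / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


vector_hits = ["d1", "d2", "d3"]  # hypothetical dense-retrieval ranking
bm25_hits = ["d3", "d1", "d4"]    # hypothetical BM25 ranking
for doc_id, score in reciprocal_rank_fusion([vector_hits, bm25_hits]):
    print(doc_id, round(score, 4))
# d1 ranks first: it sits near the top of both lists.
```

Because RRF uses ranks rather than raw scores, BM25 scores and cosine similarities never need to be normalized onto a common scale. Documents that appear in both lists rise to the top.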

Cross-Encoder Reranking: Cohere Rerank 3.5 demonstrates 23.4% improvement over hybrid search alone on the BEIR benchmark, reducing irrelevant passages from 30-40% to under 10%.

Query Transformation: Hypothetical Document Embeddings (HyDE) generates hypothetical answers using an LLM, then embeds those answers as search queries, showing 20-35% improvement on ambiguous queries.
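HyDE changes what gets embedded. Instead of the query itself, which is often short or ambiguous, the retriever embeds an LLM-written hypothetical answer and searches with that vector. In the sketch below, `generate` and `embed` are injected callables standing in for your LLM and embedding model, and the prompt text is an assumption. The toy bag-of-words embedding exists only to make the sketch runnable.

```python
import math
from typing import Callable, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def hyde_search(
    query: str,
    generate: Callable[[str], str],
    embed: Callable[[str], list[float]],
    docs: Sequence[str],
    doc_vectors: Sequence[Sequence[float]],
    k: int = 3,
) -> list[str]:
    """Retrieve documents using the embedding of a hypothetical answer."""
    hypothetical = generate(f"Write a short passage answering: {query}")
    query_vector = embed(hypothetical)  # embed the generated answer, not the query
    ranked = sorted(
        zip(docs, doc_vectors),
        key=lambda pair: cosine(query_vector, pair[1]),
        reverse=True,
    )
    return [doc for doc, _ in ranked[:k]]


# Toy stand-ins (hypothetical) so the sketch runs without external services.
VOCAB = ["rank", "fusion", "lists", "banana", "yellow"]


def toy_embed(text: str) -> list[float]:
    words = text.lower().split()
    return [float(words.count(w)) for w in VOCAB]


def toy_generate(prompt: str) -> str:
    return "fusion merges rank lists"


docs = ["rank fusion merges ranked lists", "a banana is yellow"]
vectors = [toy_embed(d) for d in docs]
print(hyde_search("How do I combine two result sets?", toy_generate, toy_embed, docs, vectors, k=1))
```

Here the raw query shares no vocabulary with the relevant document, so it would embed to a zero vector. The hypothetical answer does share vocabulary and finds the match.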

```python
# Example: Hybrid RAG with reranking
# Assumes `documents` (a list of LangChain Document objects) and `embeddings`
# (any LangChain embeddings instance) are already defined, and that the
# Pinecone index "context-index" exists. Imports follow the langchain 0.3
# layout (core retrievers in langchain, integrations in separate packages).
from langchain.retrievers import ContextualCompressionRetriever, EnsembleRetriever
from langchain_cohere import CohereRerank
from langchain_community.retrievers import BM25Retriever
from langchain_pinecone import PineconeVectorStore

# Dense retriever: semantic similarity over embeddings
vector_retriever = PineconeVectorStore.from_documents(
    documents, embeddings, index_name="context-index"
).as_retriever(search_kwargs={"k": 10})

# Sparse retriever: BM25 keyword matching (requires the rank_bm25 package)
bm25_retriever = BM25Retriever.from_documents(documents)
bm25_retriever.k = 10

# Fuse both result lists with weighted Reciprocal Rank Fusion
ensemble_retriever = EnsembleRetriever(
    retrievers=[vector_retriever, bm25_retriever],
    weights=[0.5, 0.5],  # equal weighting
)

# Rerank the fused candidates with Cohere's cross-encoder and keep the top 3
compressor = CohereRerank(model="rerank-v3.5", top_n=3)
compression_retriever = ContextualCompressionRetriever(
    base_compressor=compressor,
    base_retriever=ensemble_retriever,
)

# Query the pipeline (invoke() replaces the deprecated get_relevant_documents())
results = compression_retriever.invoke(
    "How does context engineering improve LLM performance?"
)
```

Evolution Beyond Naive RAG

The original version of RAG relied on a single dense vector search and fed the top results directly into an LLM. Those implementations quickly ran into limits on relevance, scalability, and noise. That pushed RAG toward newer approaches: agentic retrieval, GraphRAG, and broader context engineering.

GraphRAG allows models to reason over entities and relationships rather than flat text chunks. Microsoft open-sourced its GraphRAG framework in July 2024, making graph-based retrieval accessible to wider developer audiences.
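The key difference from flat-chunk retrieval is that context is assembled by walking relationships. The toy sketch below does this in three steps:

- store (subject, relation, object) triples,
- find entities named in the query by substring match,
- expand a fixed number of hops from those entities.

It is not Microsoft's GraphRAG, which uses an LLM to extract the graph and builds summaries of communities of related entities. The triples here are hypothetical and hand-written.

```python
from collections import defaultdict

Triple = tuple[str, str, str]


def build_graph(triples: list[Triple]) -> dict[str, list[Triple]]:
    """Index each triple under both of its entities (lowercased)."""
    index: dict[str, list[Triple]] = defaultdict(list)
    for subj, rel, obj in triples:
        index[subj.lower()].append((subj, rel, obj))
        index[obj.lower()].append((subj, rel, obj))
    return index


def graph_context(query: str, index: dict[str, list[Triple]], hops: int = 1) -> list[str]:
    """Collect facts within `hops` relationships of entities named in the query."""
    q = query.lower()
    frontier = sorted(entity for entity in index if entity in q)
    seen_entities = set(frontier)
    seen_facts: set[Triple] = set()
    facts: list[str] = []
    for _ in range(hops):
        next_frontier = []
        for entity in frontier:
            for subj, rel, obj in index[entity]:
                if (subj, rel, obj) not in seen_facts:
                    seen_facts.add((subj, rel, obj))
                    facts.append(f"{subj} {rel} {obj}")
                for neighbor in (subj.lower(), obj.lower()):
                    if neighbor not in seen_entities:
                        seen_entities.add(neighbor)
                        next_frontier.append(neighbor)
        frontier = next_frontier
    return facts


triples = [
    ("GraphRAG", "was open-sourced by", "Microsoft"),
    ("Microsoft", "develops", "Azure AI Search"),
    ("LLMLingua", "compresses", "prompts"),
]
graph = build_graph(triples)
print(graph_context("Who open-sourced GraphRAG?", graph, hops=1))
print(graph_context("Who open-sourced GraphRAG?", graph, hops=2))
```

With one hop, only the fact linking GraphRAG to Microsoft is returned. A second hop pulls in facts about Microsoft itself. The unrelated LLMLingua triple never enters the context.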

Context Processing and Long-Context Handling

As context windows expand, processing efficiency becomes critical. Context processing addresses long sequence handling, self-refinement, and structured information integration.

Compression Techniques

Prompt compression methods like SelectiveContext remove low-information content by scoring lexical units (tokens, phrases, or sentences) by their self-information under a causal language model. LLMLingua uses a smaller LM to rank and preserve key tokens, achieving up to 20x shorter prompts that still work with black-box LLMs.
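SelectiveContext's scoring rule is self-information, -log P(token): rare content scores high, predictable content scores low. The sketch below keeps that rule with two simplifying assumptions. It estimates P from add-one-smoothed unigram counts over a reference corpus instead of from a language model, and it works on single words rather than phrases or sentences. Frequent function words score lowest and are dropped first.

```python
import math
import re
from collections import Counter


def filter_low_information(text: str, reference_corpus: str, keep_ratio: float = 0.6) -> str:
    """Keep the most informative words of `text`, preserving their order."""
    ref_words = re.findall(r"\w+", reference_corpus.lower())
    counts = Counter(ref_words)
    # Add-one smoothing over the seen vocabulary plus one slot for unseen words
    denominator = len(ref_words) + len(counts) + 1

    words = re.findall(r"\w+", text)
    # Self-information: rarer words (lower probability) carry more information
    info = [-math.log((counts[w.lower()] + 1) / denominator) for w in words]

    n_keep = max(1, round(len(words) * keep_ratio))
    most_informative = sorted(range(len(words)), key=lambda i: info[i], reverse=True)[:n_keep]
    return " ".join(words[i] for i in sorted(most_informative))


reference = "the the the the of of of and and a a a"
print(filter_low_information("The retriever ranks the passages", reference, keep_ratio=0.6))
# retriever ranks passages
```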

KVzip compresses the key-value (KV) cache that serves as a chatbot's conversation memory, evicting entries that are not needed to reconstruct the context. This lets chatbots retain accuracy while shrinking that memory by 3-4x and speeding up response generation.

Dynamic Memory Compression (DMC) enables models to learn different compression ratios in different heads and layers, achieving up to 7x throughput increase during auto-regressive inference with up to 4x cache compression.

The Context Rot Challenge

Expanding context windows does not guarantee improved model performance; research reveals that as input tokens increase, LLM performance can degrade through context rot, where models struggle to effectively utilize information distributed across extremely long contexts.

Many LLMs exhibit a U-shaped performance curve for information retrieval from context windows, recalling information best from the beginning and end while information buried in the middle is often overlooked.
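One common mitigation is to reorder retrieved passages so the strongest ones sit at the edges of the context and the weakest land in the middle. The sketch assumes the input list is already sorted from most to least relevant. LangChain ships a similar transformer (LongContextReorder), but this is an independent illustration, not its implementation.

```python
def reorder_for_long_context(docs_by_relevance: list[str]) -> list[str]:
    """Place the most relevant items at the start and end, the least in the middle.

    Items alternate between a front half and a back half; the back half is
    reversed so the second-most relevant item ends up last.
    """
    front = docs_by_relevance[0::2]  # 1st, 3rd, 5th, ... most relevant
    back = docs_by_relevance[1::2]   # 2nd, 4th, ... most relevant
    return front + back[::-1]


print(reorder_for_long_context(["d1", "d2", "d3", "d4", "d5"]))
# ['d1', 'd3', 'd5', 'd4', 'd2']: d1 first, d2 last, d5 (least relevant) in the middle
```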

Memory Systems and Context Management

Context management covers memory hierarchies, compression, and optimization, enabling agents to maintain state across extended interactions.

Memory Types:

- Temporal context: timing, sequence, and duration

- Spatial context: locations and conditions

- Task context: goals and progress

- Social context: agent roles

- Domain context: field knowledge

- Personal context: state and history

- Interaction context: references and conversation history

Implementation Patterns:

LangGraph's long-term memory supports several retrieval styles, including fetching files and embedding-based retrieval over memory collections. Note-taking via a scratchpad persists information outside the context window so agents can still access it. Anthropic's multi-agent researcher, for example, saves its plan to memory so the plan survives if the context window exceeds 200,000 tokens and is truncated.

```python
# Example: Implementing hierarchical memory for a LangGraph agent
from datetime import datetime
from typing import TypedDict

from langgraph.checkpoint.memory import MemorySaver

# In-memory checkpointer: pass it to StateGraph(AgentState).compile(checkpointer=memory)
# so graph state persists across invocations within a thread.
memory = MemorySaver()

# Agent state with short-term and long-term memory
class AgentState(TypedDict):
    messages: list
    scratchpad: dict        # working memory for the current task
    long_term_memory: list  # persistent facts

# vector_search() and get_embedding() are placeholders for your own embedding
# model and similarity search; they are not part of LangGraph.
def retrieve_relevant_memories(state: AgentState, query: str, k: int = 5) -> list:
    """Retrieve the k stored memories most relevant to the current query."""
    return vector_search(state["long_term_memory"], query, k=k)

def update_memory(state: AgentState, new_info: dict) -> AgentState:
    """Write to working memory and promote important facts to long-term memory."""
    # Short-term: record in the scratchpad
    state["scratchpad"][new_info["key"]] = new_info["value"]

    # Long-term: persist only facts above an importance threshold
    if new_info["importance"] > 0.7:
        state["long_term_memory"].append({
            "content": new_info["value"],
            "timestamp": datetime.now(),
            "embedding": get_embedding(new_info["value"]),
        })
    return state
```

System Implementations: From RAG to Multi-Agent Systems

Production RAG Systems

A recent global survey found that more than 80% of in-house generative AI projects fall short. Getting a RAG system to production depends on a few specific architectural decisions.

Data Quality Foundation:

The quality of your RAG system is bounded by the quality of your knowledge base. Many teams dump their entire knowledge base into a RAG system, assuming more data means better results. According to a 2024 survey, poor data cleaning was cited as the primary cause of failure in 42% of unsuccessful RAG implementations.

Chunking Strategy:

Large chunks are rich in context, which is great for LLM output, but their embeddings can become noisy and averaged out, making it harder to pinpoint relevant information and taking up more space in the context window. Finding the balance between precision and context is key to high-performance RAG.
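A common starting point is fixed-size chunks with overlap, so a sentence cut at one boundary still appears whole in the neighboring chunk. The sketch below counts words as a rough proxy for tokens. The 200/40 defaults are illustrative assumptions to tune against your own retrieval metrics, not recommended values.

```python
def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into chunks of chunk_size words, each sharing `overlap`
    words with the previous chunk."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
for chunk in chunk_words(sample, chunk_size=4, overlap=1):
    print(chunk)
# w0 w1 w2 w3
# w3 w4 w5 w6
# w6 w7 w8 w9
```

Larger `chunk_size` gives the LLM more surrounding context per hit. Smaller values give sharper embeddings. The overlap trades some index size for robustness at boundaries.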

Continuous Updates:

Production-ready systems need automated refresh pipelines. Build a delta processing system similar to Git diff that only updates what’s changed, rather than reindexing everything.

```python
# Example: Delta-based knowledge base updates
from datetime import datetime
from hashlib import sha256

class DeltaRAGUpdater:
    """Re-index only documents whose content changed since the last run.

    Assumes `vector_store` exposes add_documents(docs, ids=...) and
    delete(ids=...), and that each document is a dict with 'id' and 'content'
    keys. `doc_tracker` is any dict-like mapping of doc id -> {'hash', 'updated'}.
    """

    def __init__(self, vector_store, doc_tracker):
        self.vector_store = vector_store
        self.doc_tracker = doc_tracker  # tracks the last indexed hash per document

    def compute_document_hash(self, document):
        """Generate a content hash for change detection."""
        content = f"{document['id']}:{document['content']}"
        return sha256(content.encode()).hexdigest()

    def update_knowledge_base(self, new_documents):
        """Index new documents and re-index changed ones; skip unchanged ones."""
        additions, updates, hashes = [], [], {}
        for doc in new_documents:
            doc_hash = self.compute_document_hash(doc)
            hashes[doc["id"]] = doc_hash
            existing = self.doc_tracker.get(doc["id"])
            if existing is None:
                additions.append(doc)  # new document
            elif existing["hash"] != doc_hash:
                updates.append(doc)    # changed document

        # Remove stale versions of changed documents before re-adding them
        if updates:
            self.vector_store.delete(ids=[doc["id"] for doc in updates])

        # Batch-write all new and changed documents, keyed by document id
        changed = additions + updates
        if changed:
            self.vector_store.add_documents(changed, ids=[doc["id"] for doc in changed])

        # Record the hashes that are now indexed
        for doc in changed:
            self.doc_tracker[doc["id"]] = {
                "hash": hashes[doc["id"]],
                "updated": datetime.now(),
            }

        return len(changed)
```

Multi-Agent Systems and Context Coordination

Multi-agent systems can manage extended contexts by dividing long inputs among agents, each working within its own context window. This preserves the continuity of understanding that detailed, context-driven decision-making requires.

The Disconnected Models Problem:

One of the most critical challenges is the disconnected models problem—the difficulty of maintaining coherent context across multiple agent interactions. Critical vulnerabilities include context management challenges in aligning layered contexts (global, agent-specific, shared knowledge) and lack of frameworks for cross-agent memory access and learning.

Model Context Protocol (MCP):

The Model Context Protocol, introduced by Anthropic in late 2024, addresses fragmented ecosystems by providing a universal standard for connecting AI applications to external data sources and tools, transforming the M×N integration problem into a simpler M+N problem.

The initial public release included documentation, SDKs for multiple programming languages, and sample implementations, establishing the protocol's core architecture and primitives.
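The M×N-to-M+N claim is simple arithmetic. Without a shared protocol, each of M applications needs its own connector to each of N tools. With one, each application implements a client once and each tool exposes a server once. The sizes below are arbitrary examples.

```python
def point_to_point(m_apps: int, n_tools: int) -> int:
    """A custom connector for every (application, tool) pair."""
    return m_apps * n_tools


def via_protocol(m_apps: int, n_tools: int) -> int:
    """Each application implements one client; each tool exposes one server."""
    return m_apps + n_tools


for m, n in [(3, 10), (10, 50)]:
    print(f"{m} apps x {n} tools: {point_to_point(m, n)} connectors vs {via_protocol(m, n)}")
# 3 apps x 10 tools: 30 connectors vs 13
# 10 apps x 50 tools: 500 connectors vs 60
```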

```python
# Example: Sharing context between specialized agents
# Context is passed explicitly between calls here. MCP would standardize how
# these agents reach external tools and data, but it is not used in this sketch.
# decompose_query() and parse_research_output() are hypothetical helpers you
# would implement yourself; parse_research_output() is assumed to return a
# dict with a "summary" key.
from anthropic import Anthropic

client = Anthropic()  # reads ANTHROPIC_API_KEY from the environment
MODEL = "claude-sonnet-4-20250514"

# Agent 1: Research specialist
def research_agent(query, shared_context):
    """Agent specialized in information gathering."""
    response = client.messages.create(
        model=MODEL,
        max_tokens=2000,
        messages=[{
            "role": "user",
            "content": (
                f"Context from team: {shared_context}\n\n"
                f"Research task: {query}\n\n"
                "Return findings in a structured format."
            ),
        }],
    )
    # response.content is a list of content blocks; the first holds the text
    return parse_research_output(response.content[0].text)

# Agent 2: Synthesis specialist
def synthesis_agent(findings_list, objective):
    """Agent specialized in synthesizing information."""
    # Consolidate findings from multiple research agents
    consolidated = "\n\n".join(
        f"Source {i + 1}: {f['summary']}" for i, f in enumerate(findings_list)
    )
    response = client.messages.create(
        model=MODEL,
        max_tokens=3000,
        messages=[{
            "role": "user",
            "content": (
                "Synthesize these findings into actionable insights:\n\n"
                f"{consolidated}\n\n"
                f"Objective: {objective}"
            ),
        }],
    )
    return response.content[0].text

# Orchestration with explicit shared context
def multi_agent_workflow(user_query):
    shared_context = {"objective": user_query, "findings": [], "synthesis": None}

    # Phase 1: Research (sequential here; the calls could run concurrently)
    research_queries = decompose_query(user_query)
    findings = [research_agent(q, shared_context) for q in research_queries]
    shared_context["findings"] = findings

    # Phase 2: Synthesis
    synthesis = synthesis_agent(findings, user_query)
    shared_context["synthesis"] = synthesis
    return synthesis
```

Tool-Integrated Reasoning

Tools allow LLMs to perform actions such as automating operations, retrieving data using RAG, or providing information from other sources. However, tool confusion is a significant issue—with many tools, LLMs can be confused and call irrelevant tools.

Tool Selection Optimization:

Research found that Llama 3.1 8B performance improved when provided with fewer tools (19 compared with 46). Optimizing the number of tools available is an open research area.

LangGraph’s Bigtool library applies semantic search over tool descriptions to select the most relevant tools when working with large collections.

Research by Tiantian Gan and Qiyao Sun showed that applying RAG to tool descriptions, so that only relevant tools are offered for each request, significantly improves performance. Keeping the selection under 30 tools yielded three times higher tool-selection accuracy.
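Retrieval over tool descriptions exposes only the few tools most similar to the current request. The sketch below ranks tools by cosine similarity of word counts. In practice you would use an embedding model, and Bigtool has its own API that this does not reproduce. The tool names and descriptions are hypothetical.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def select_tools(query: str, tools: dict[str, str], top_k: int = 3) -> list[str]:
    """Return the names of the top_k tools whose descriptions best match the query."""
    q = bag_of_words(query)
    ranked = sorted(tools, key=lambda name: cosine(q, bag_of_words(tools[name])), reverse=True)
    return ranked[:top_k]


tools = {
    "get_weather": "Get the current weather forecast for a city",
    "send_email": "Send an email message to a recipient",
    "search_docs": "Search internal documentation pages",
}
print(select_tools("What is the weather forecast in Paris?", tools, top_k=1))
# ['get_weather']
```

Only the selected tools' schemas would then be passed to the model, keeping the offered set small no matter how large the full catalog grows.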

Common Pitfalls and Troubleshooting

Context Poisoning and Clash

RAG can improve responses from LLMs, provided retrieved context is relevant to the initial prompt. If context is not relevant, you can get worse results through context poisoning or context clash, where misleading or contradictory information contaminates reasoning.

A Microsoft and Salesforce study found that sharding information across multiple conversational turns, instead of providing it all at once, caused large performance drops: 39% on average, with OpenAI's o3 model falling from 98.1 to 64.1.

Mitigation Strategies:

- Implement context pruning to remove outdated or conflicting information

- Use separate reasoning threads for different information sources

- Apply conflict-resolution logic before passing context to the model

- Use context offloading: Anthropic's think tool, for example, gives models a separate workspace to process information without cluttering the main context, improving performance by up to 54% on specialized agent benchmarks
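A minimal version of conflict resolution is a last-write-wins policy. When two sources disagree about the same key, keep the most recent observation and log the clash for review. Recency is an assumption here; source reliability or explicit versioning are equally valid policies. The facts in the example are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Fact:
    key: str  # what the fact is about, e.g. "ceo"
    value: str
    source: str
    observed_at: datetime


def resolve_conflicts(facts: list[Fact]) -> tuple[list[Fact], list[tuple[Fact, Fact]]]:
    """Keep the newest fact per key; also return (older, newer) pairs that disagreed."""
    latest: dict[str, Fact] = {}
    conflicts: list[tuple[Fact, Fact]] = []
    for fact in sorted(facts, key=lambda f: f.observed_at):
        previous = latest.get(fact.key)
        if previous is not None and previous.value != fact.value:
            conflicts.append((previous, fact))
        latest[fact.key] = fact
    return list(latest.values()), conflicts


facts = [
    Fact("ceo", "Alice", "wiki", datetime(2023, 1, 1)),
    Fact("hq", "Paris", "wiki", datetime(2023, 6, 1)),
    Fact("ceo", "Bob", "news", datetime(2024, 1, 1)),
]
resolved, clashes = resolve_conflicts(facts)
print("\n".join(f"{f.key}: {f.value} (source: {f.source})" for f in resolved))
print(f"{len(clashes)} conflict(s) resolved")
```

Only the resolved facts are passed to the model, so it never sees two contradictory values for the same key.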

Context Overload

Context overload slows systems down and reduces accuracy. Multi-turn conversations, large knowledge bases, and long histories create bloated prompts that models struggle to parse efficiently.

Even when the retrieved context is relevant, sheer volume can overwhelm the model, leading to context confusion or context distraction. Multiple studies show that model accuracy tends to decline beyond a certain context size.
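A simple guard against overload is to cap conversation history at a token budget. Always keep the system instructions, then keep the most recent turns that fit and drop older ones. The word-count token estimate is a crude assumption; swap in your model's tokenizer. In practice, dropped turns are often summarized rather than discarded.

```python
from typing import Callable


def approx_tokens(text: str) -> int:
    """Crude token estimate: whitespace-separated words."""
    return len(text.split())


def trim_history(
    messages: list[dict],
    max_tokens: int,
    count_tokens: Callable[[str], int] = approx_tokens,
) -> list[dict]:
    """Keep all system messages plus the newest contiguous turns that fit the budget."""
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]
    budget = max_tokens - sum(count_tokens(m["content"]) for m in system)

    kept: list[dict] = []
    for message in reversed(turns):  # walk from newest to oldest
        cost = count_tokens(message["content"])
        if cost > budget:
            break  # stop at the first turn that no longer fits
        kept.append(message)
        budget -= cost
    return system + kept[::-1]


history = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Summarize the quarterly report for me"},
    {"role": "assistant", "content": "Revenue grew eight percent"},
    {"role": "user", "content": "What drove the growth?"},
]
print([m["role"] for m in trim_history(history, max_tokens=14)])
# ['system', 'assistant', 'user']
```

The trimmed history here begins with an assistant turn. Some chat APIs require the first non-system message to come from the user, so real code may need to drop or summarize that turn as well.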

Maximum Effective Context Window

Research shows that the maximum effective context window (MECW) differs drastically from the maximum context window (MCW) and shifts with problem type. A few top models failed with as few as 100 tokens in context. Most showed severe degradation by 1,000 tokens, falling short of their MCW by over 99%.

Best Practices:

- Start small: Begin with minimal context and add only what improves performance

- Monitor metrics: Track retrieval precision, recall, and end-to-end task success

- Implement observability: Use tools like LangSmith or Braintrust for production monitoring

- Test context positions: Place critical information at the beginning or end of the context

- Use compression wisely: Apply techniques like summarization and token pruning strategically
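Retrieval precision and recall are easy to track once you have a labeled set of relevant documents per query. The helpers below compute the standard precision@k and recall@k; the document ids are hypothetical.

```python
def precision_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the top-k retrieved ids that are relevant."""
    if k <= 0:
        raise ValueError("k must be positive")
    hits = sum(1 for doc_id in retrieved[:k] if doc_id in relevant)
    return hits / k


def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of all relevant ids that appear in the top-k retrieved."""
    if not relevant:
        return 0.0
    hits = sum(1 for doc_id in retrieved[:k] if doc_id in relevant)
    return hits / len(relevant)


retrieved = ["d1", "d2", "d3", "d4"]
relevant = {"d1", "d3", "d7"}
print(precision_at_k(retrieved, relevant, k=2))  # 0.5
print(recall_at_k(retrieved, relevant, k=4))     # 2 of the 3 relevant ids found
```

Low precision means noisy context, which feeds the poisoning and overload problems described earlier. Low recall means the model never sees the evidence it needs.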

```python
# Example: Context window monitoring and optimization
# count_tokens() and summarize() below are crude placeholders so the example
# runs; replace them with your model's tokenizer and an LLM-based summarizer.

def count_tokens(text):
    """Rough token estimate: whitespace-separated words."""
    return len(text.split())

def summarize(text, target_ratio=0.5):
    """Placeholder 'summary' that keeps the first target_ratio of the words."""
    words = text.split()
    return " ".join(words[: max(1, int(len(words) * target_ratio))])

class ContextMonitor:
    def __init__(self, max_tokens=4000, warning_threshold=0.8):
        self.max_tokens = max_tokens
        self.warning_threshold = warning_threshold
        self.metrics = []

    def check_context_health(self, context_parts):
        """Monitor context composition and flag high utilization."""
        total_tokens = sum(count_tokens(part) for part in context_parts)
        utilization = total_tokens / self.max_tokens
        analysis = {
            "total_tokens": total_tokens,
            "utilization": utilization,
            "parts_count": len(context_parts),
            "avg_part_size": total_tokens / len(context_parts) if context_parts else 0,
            "warning": utilization > self.warning_threshold,
        }
        if analysis["warning"]:
            # Recommend compression strategies
            analysis["recommendation"] = "COMPRESS"
            analysis["suggested_actions"] = [
                "Apply summarization to oldest messages",
                "Remove redundant information",
                "Switch to hybrid retrieval with smaller k",
            ]
        self.metrics.append(analysis)
        return analysis

    def optimize_context(self, context_parts, priority_scores):
        """Trim context to the warning threshold, keeping high-priority parts first."""
        # Sort by priority (higher = more important); output follows this order
        sorted_parts = sorted(
            zip(context_parts, priority_scores),
            key=lambda x: x[1],
            reverse=True,
        )
        # Greedily select parts until the target budget is reached
        selected = []
        tokens_used = 0
        target = int(self.max_tokens * self.warning_threshold)
        for part, _score in sorted_parts:
            part_tokens = count_tokens(part)
            if tokens_used + part_tokens <= target:
                selected.append(part)
                tokens_used += part_tokens
            else:
                # Part doesn't fit: try a compressed version, otherwise drop it
                compressed = summarize(part, target_ratio=0.5)
                compressed_tokens = count_tokens(compressed)
                if tokens_used + compressed_tokens <= target:
                    selected.append(compressed)
                    tokens_used += compressed_tokens
        return selected, tokens_used
```

Advanced Patterns and Future Directions

Agentic RAG

The most dynamic aspect of LLM applications in 2025 has been agentic RAG, which uses agent planning and reflection to enhance the RAG process itself. Rather than static retrieval, agents can iteratively refine queries, validate retrieved content, and adjust retrieval strategies based on intermediate results.
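The control flow of agentic RAG is a loop: retrieve, judge what came back, and rewrite the query if the evidence is weak. The sketch injects `search`, `grade`, and `rewrite` as callables; in a real system the latter two would typically be LLM calls. The stopping rule is an assumption: at least `min_docs` relevant passages, or `max_rounds` attempts. The toy index exists only to make the sketch runnable.

```python
from typing import Callable


def agentic_retrieve(
    question: str,
    search: Callable[[str], list[str]],
    grade: Callable[[str, str], bool],
    rewrite: Callable[[str, list[str]], str],
    min_docs: int = 1,
    max_rounds: int = 3,
) -> tuple[list[str], str]:
    """Iteratively retrieve, validate, and refine; return (evidence, last query)."""
    query = question
    evidence: list[str] = []
    for _ in range(max_rounds):
        results = search(query)
        # Validate: keep only new passages judged relevant to the original question
        evidence += [doc for doc in results if grade(question, doc) and doc not in evidence]
        if len(evidence) >= min_docs:
            break
        # Reflect: reformulate the query using what the last search returned
        query = rewrite(question, results)
    return evidence, query


# Toy stand-ins (hypothetical) so the loop runs without an LLM or index.
toy_index = {
    "context engineering": ["A post about prompt wording"],
    "context window management": ["Notes on managing the context window"],
}
evidence, final_query = agentic_retrieve(
    "context engineering",
    search=lambda q: toy_index.get(q, []),
    grade=lambda question, doc: "context" in doc.lower(),
    rewrite=lambda question, results: "context window management",
)
print(evidence, final_query)
```

The first search returns nothing the grader accepts, so the loop rewrites the query. The second round finds relevant evidence and stops.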

Context Engineering with Reinforcement Learning

ACON introduces a guideline optimization pipeline that refines compressor prompts via failure analysis in natural language space, ensuring critical environment-specific and task-relevant information is retained. The system lowers memory usage by 26-54% in peak tokens while maintaining task performance and enables small LMs to achieve 20-46% performance improvements.

Cognitive Architectures

Rather than hand-engineering context management, systems could learn optimal context selection strategies through meta-learning or reinforcement learning. Recent research suggests LLMs develop emergent symbolic processing mechanisms that could enable more sophisticated context abstraction.

Conclusion

Context engineering represents a fundamental shift in AI development—from crafting prompts to orchestrating information ecosystems. The evolution from early RAG systems to today’s multi-agent, tool-using, graph-enhanced implementations demonstrates a clear trajectory toward modular, stateful, and protocol-driven architectures.

Success in production requires understanding the taxonomy: foundational components (retrieval, processing, memory) combine into system implementations (RAG pipelines, multi-agent systems, tool-integrated reasoning). Each layer can be optimized independently while contributing to the whole.

Key Takeaways:

- Context windows are finite resources requiring careful engineering

- Hybrid approaches (vector + keyword search, reranking, compression) outperform single techniques

- Multi-agent systems mitigate context overload through specialization

- Standardized protocols like MCP reduce integration complexity

- Production success demands observability, evaluation, and continuous optimization

Next Steps:

- Implement hybrid RAG with reranking for your domain

- Experiment with memory hierarchies for long-running agents

- Adopt MCP for tool integrations

- Build comprehensive evaluation and monitoring pipelines

- Explore agentic patterns for adaptive retrieval

The field continues evolving rapidly. While techniques will change, the core principle remains: intelligently curating what information enters the model’s attention budget at each step determines success. Context engineering isn’t just about making LLMs work—it’s about building systems that think, remember, and reason over time.

References:

- LangChain Blog - Context Engineering for Agents - https://blog.langchain.com/context-engineering-for-agents/ - Comprehensive overview of context strategies for agent systems

- Elastic Labs - Context Engineering Overview - https://www.elastic.co/search-labs/blog/context-engineering-overview - Production context engineering patterns with RAG and tools

- Weaviate - Context Engineering Guide - https://weaviate.io/blog/context-engineering - Deep dive on memory, retrieval, and MCP

- DataCamp - Context Engineering Examples - https://www.datacamp.com/blog/context-engineering - Practical examples and common pitfalls

- arXiv - A Survey of Context Engineering for Large Language Models - https://arxiv.org/html/2507.13334v1 - Formal taxonomy and theoretical foundations

- arXiv - Advancing Multi-Agent Systems Through Model Context Protocol - https://arxiv.org/html/2504.21030v1 - MCP architecture and multi-agent coordination

- Applied AI - Enterprise RAG Architecture - https://www.applied-ai.com/briefings/enterprise-rag-architecture/ - Production RAG patterns and performance optimization